Scalable AI Incident Classification

by Simon Mylius and Jamie Bernardi

Summary

A number of AI incident databases exist, but their data is inconsistently structured. I’ve developed a tool to structure AI incident data and presented the results with a dashboard, to help policymakers make evidence-based decisions. The tool classifies real-world, reported incidents by risk category and harm caused, aiming to reveal information about the impacts of AI on society that is currently opaque.

Using an LLM, the tool processes raw incident reports, providing a scalable methodology that could be applied cost-effectively across much larger datasets as numbers of reported incidents grow.

I classified all incidents in the AI Incident Database according to the MIT Risk Repository’s causal and domain taxonomies. I then assigned scores for harm-severity on 10 different dimensions based on the CSET AI Harm Taxonomy, using a scale I developed to reflect impact from zero to 'worst-case catastrophe'.

The outputs are a structured dataset and a dashboard that you can explore through a graphical web interface to identify trends and insights.

Scope of work

This project is intended as a proof of concept to explore the potential capabilities and limitations of a scalable incident analysis process, investigating the hypothesis that it could address the needs of policymakers by providing quantitative information on which to base policy decisions. To learn how it could be improved and to demonstrate value, I have made the work-in-progress dashboard available online and encourage users to explore it and share feedback. All feedback is welcome, but we are particularly keen to hear from people involved in policymaking or who may be able to use this type of tool in other ways.

Feedback you provide will serve as one input to our further research. We intend to thoroughly explore real-world use-cases for the tool, and rigorously evaluate the validity of its results, helping us decide whether to take the concept forward.

Why incident reporting?

Incident reporting processes are used in many high-hazard industries, like healthcare, aviation and cybersecurity. These reporting processes were created for different purposes, but each fundamentally aims to improve safety outcomes through either facilitating safety learning or establishing accountability for incidents (Wei and Heim, forthcoming).

Safety learning often means noticing empirical patterns and trends, to develop theories about how a technology and its interaction with the world is changing.

Establishing accountability for an incident involves some actor reporting information about an incident that enables further investigation to understand how the entities responsible contributed to the harm caused. These processes can often involve mandatory reporting.

The potential benefits of incident monitoring for AI are laid out in this report from the Centre for Long-Term Resilience, which describes currently unrealised benefits of incident reporting, primarily gaining “visibility of risks in real world contexts”. Pre-deployment tests of frontier AI systems fall short in identifying systemic risks because modelling the societal context is challenging, as is quantifying potential aggregated harm. Certain kinds of incident reporting might set high thresholds for harm or damage, and these are best suited to preventing decisive, catastrophic accidents (assuming we get warning shots). Other kinds of incident reporting track smaller risks that aggregate into large societal risks. This paper makes recommendations for governments on post-deployment monitoring of AI, including incident reporting, and references a number of incident reporting mechanisms that have been proposed, such as developing an ombudsman for citizens to report AI harms, mandating reporting for major AI incidents, and collating AI-related incidents from sector-specific regulators.

The effectiveness of an incident reporting process in achieving its goal depends on how it is designed, implemented and used. Learning from incidents requires data to have structure, meaning it follows a schema where data fields and classifications are used consistently across the entire dataset, removing ambiguity in the meaning of the information contained in the database. The design of the process and structure of the data should be informed by the reporting process' purpose. Without safety learning, incident numbers would increase as AI systems are deployed ever-more-widely: a scalable method of structuring data from reports and classifying incidents will be necessary if we wish to effectively use the data to improve safety outcomes.

Why isn’t incident reporting impacting AI policy today?

On the premise that incident reporting has improved safety outcomes in other domains, a number of AI incident databases have been created, including the AI Incident Database, the OECD’s AI Incident Monitor, the AIAAIC Repository and the Political Deepfakes Database, amongst others. However, few lessons have yet been drawn from them, and they are limited in scope.

This section explains two current problems with AI incident reporting processes - unstructured data and a lack of clarity on how incident reporting could be used to inform policy. It then argues for an iterative approach to resolving these issues.

A) Policymakers do not have access to structured AI incident report data to inform evidence-based decision making

Available AI incident databases are currently limited. Because they rely on voluntary reporting, only a handful of incidents are reported, and details are filled in inconsistently.

Furthermore, these databases aren’t taxonomised consistently. It is therefore impossible to conduct certain analyses across broad datasets, like filtering on a specific risk domain to observe patterns or trends. Additionally, whilst some incidents have been assigned an overall ‘severity’ rating in current databases (example), there is no objective methodology in use to assess the severity of harm across different AI incident categories (e.g. physical harm, financial loss, environmental damage, loss of privacy).

Consistent classification of incidents and harm severities would enable richer analysis and support future root cause analysis work, helping policymakers understand how AI incidents happen. Once the prevalence, causes and impact of specific harms are understood, the most significant risk areas can be selected and the most effective interventions prioritised.

B) Before public investment is made in incident monitoring, a deeper understanding of policy requirements is needed

Incident reporting processes depend on what follow-up actions they are intended to inform (e.g. learning or accountability) - policymakers' needs are not monolithic. In turn, the most effective way to structure and present incident data depends on how it is used in these processes. Little work has been done to designate clear users of these databases or to understand their needs. A user-focused investigation would be valuable, to assess whether the data being collected by current databases adequately answers specific questions for different actors involved in policymaking. If not, where are the gaps?

Investigating policy requirements could also discover needs for incident reporting processes that differ greatly from current approaches, like mandatory reporting regimes that capture the most severe incidents and facilitate in-depth investigations. However, processes like that face other systemic problems. Firstly, apart from in extremely strict regimes (like aviation), near misses go unreported. Secondly, it’s difficult to use incident reporting to prevent severe incidents and catastrophic failures because we don’t have examples of them before they happen. Thirdly, it’s difficult to designate ‘what counts’ as an AI incident, as AI is usually a tool embedded in a specific domain (like health or transport). Exploring the wide range of possibilities for AI incident monitoring processes to increase public safety is a topic of ongoing research (see the aforementioned work by CLTR and CSET for examples).

One path forward for AI Incident reporting

Partly because of these problems with existing incident reporting databases, little effort has been put into comprehensively populating them, leaving the data incomplete. This is a cyclical problem: before value is demonstrated, this effort won’t be justified, and value is difficult to demonstrate with limited data. Thus, AI incident reporting today provides little value to the AI policy discussion.

We aim to address these problems by deeply understanding policymakers’ needs when drawing on AI-related incidents and then, using that understanding, building a tool that provides structure to existing incident data to aid real policy decisions.

User Stories to understand policymakers’ needs for incident reporting

In software and systems engineering, user requirements are often captured in the form of ‘user stories’. This is a key component of the user-centric Agile methodology, intended to keep product development focused on the real value of features to end-users.

We intend to use user stories to identify policymakers’ needs for incident reporting (or lack thereof). To that end, we’ve collected some preliminary user stories from policy professionals and have created some additional hypothetical examples to illustrate other potential uses. In the next phase of this work we will conduct structured interviews, using user stories to specify real problems policymakers face and to verify that our approach solves them.

Exploring the feasibility and validity of using AI tools to process incident reporting data

In this work we use AI tools to scale incident monitoring processes. Despite user research being incomplete, we anticipated this to be a requirement for a few reasons:

The volume of incidents reported could rise dramatically as the numbers of deployed AI systems and users grow.

The breadth of application areas in which AI is applied results in a very wide range of types of reports, lacking in structure and standardisation. Drawing out the role that AI played in each incident would take significant effort for a human reviewer, and in some cases specialised knowledge.

By developing a process that accommodates incident reports from a wide range of domains, overall costs are lower than with a number of separate processes, each targeting a narrower domain area. It also allows us to identify systemic patterns, which might pervade incidents across many domains.

With this in mind, it would be useful to know whether AI-based tools could be used to process incident data reliably, achieving greater scale for lower costs than analysis by human teams. This has not been demonstrated to date. Demonstrating the capabilities and limitations of a proof-of-concept (scalable) incident classification system would highlight which, if any, policymaker requirements it addresses. This would help build an understanding of the potential value of this type of tool, enabling policymakers to make better informed investment decisions.

Introducing a tool for Scalable AI Safety Incident Classification

Important caveat - Validity of analysis

This analysis is currently a proof of concept exploring the potential capabilities and limitations of a scalable incident analysis process. Validity and reliability of the tool haven’t been fully evaluated yet.

We currently classify reports from the AI Incident Database (AIID, described below). AIID relies on submissions from the public, and the objectivity and amount of detail in the reports vary across the dataset. As the reporting is voluntary, the dataset may be subject to different kinds of reporting bias. For example, as the frequency of certain types of incident increases, society may become desensitised to them and the proportion of these incidents that is reported may decrease.

We have iterated the methodology to improve the classifications’ reliability and validity, but the tool still misclassifies some incidents and there remain further opportunities for improvement. Preliminary investigation into misclassifications through ‘spot-checks’ suggests that around 20% of incidents may have been assigned a harm severity rating that differs by 1 or 2 points from that assigned by a human evaluating the same reports. Unsurprisingly, disagreement occurs more frequently in qualitative categories, which depend more on the semantics of the reports, than in quantitative ones. Some incidents showed much wider disagreement between tool and human severity ratings. In most of these cases the flaws in reasoning can be understood because the tool’s reasoning is exposed (discussed later), suggesting errors could be reduced with automated checking.
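As a rough illustration of how a spot-check like this could be tallied, the sketch below buckets the absolute difference between tool and human severity scores. The scores, the function name `disagreement_summary` and the bucket labels are all made up for illustration; this is not the project's validation code or its results.

```python
from collections import Counter


def disagreement_summary(tool_scores, human_scores):
    """Bucket absolute tool-vs-human score differences for matched incidents."""
    if len(tool_scores) != len(human_scores) or not tool_scores:
        raise ValueError("need two non-empty score lists of equal length")
    buckets = Counter()
    for tool, human in zip(tool_scores, human_scores):
        diff = abs(tool - human)
        if diff == 0:
            buckets["agree"] += 1
        elif diff <= 2:
            buckets["off_by_1_or_2"] += 1
        else:
            buckets["wider"] += 1
    total = len(tool_scores)
    # Report each bucket as a share of all spot-checked incidents.
    return {name: buckets[name] / total
            for name in ("agree", "off_by_1_or_2", "wider")}


# Hypothetical severity scores for ten spot-checked incidents (made-up data).
tool = [3, 5, 0, 7, 2, 4, 6, 1, 8, 2]
human = [3, 5, 0, 6, 2, 4, 6, 1, 4, 2]
summary = disagreement_summary(tool, human)
print(summary)
```

Separating small differences from wider ones keeps the two kinds of error visible, since the text above treats them differently.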

A further study is now underway to validate user needs and the quality of analyses to inform further improvements to the tool.

Until this work has progressed, and until data in the AIID is more comprehensive, patterns and trends observed in the data should be taken as illustrative.

Inputs

AI Incident Database (AIID)

The AI Incident Database is a set of reports for ~800 incidents, submitted by the public and curated by a human team. Although some incidents are categorised according to the CSET harm taxonomies (v0 and v1) and a Goals, Methods, Failures taxonomy, no single taxonomy is applied comprehensively to all incidents across the full dataset.

Example reported incidents:

Incident 573: Deepfake Recordings Allegedly Influence Slovakian Election

Incident 655: Scams Reportedly Impersonating Wealthy Investors Proliferating on Facebook

Our tool processes all of the raw reports from the database, as well as some of the data compiled by the AIID team: e.g. incident date, alleged developer, alleged deployer, alleged entity harmed.

MIT AI Risk Repository

The MIT AI Risk Repository “builds on previous efforts to classify AI risks by combining their diverse perspectives into a comprehensive, unified classification system”. My tool uses the repository's Causal Taxonomy (which ascribes the ‘Entity’ that took the harmful action, the ‘Intent’ behind the harmful outcome, and the ‘Timing’ of the incident in the deployment cycle) and Domain Taxonomy (which ascribes the risk’s domain and subdomain).
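To make the shape of these taxonomies concrete, here is a minimal sketch of how the three causal dimensions and the domain/subdomain hierarchy might be represented. The level names follow the repository's published Causal Taxonomy as understood here and should be checked against the repository itself. The class names and the helper `domain_number` are hypothetical and not taken from the tool.

```python
from enum import Enum


class Entity(Enum):
    """Who or what took the harmful action."""
    HUMAN = "Human"
    AI = "AI"
    OTHER = "Other"


class Intent(Enum):
    """Whether the harmful outcome was intended."""
    INTENTIONAL = "Intentional"
    UNINTENTIONAL = "Unintentional"
    OTHER = "Other"


class Timing(Enum):
    """Where in the deployment cycle the incident arose."""
    PRE_DEPLOYMENT = "Pre-deployment"
    POST_DEPLOYMENT = "Post-deployment"
    OTHER = "Other"


def domain_number(subdomain_code: str) -> int:
    """Return the parent domain of a subdomain code such as '7.3'."""
    return int(subdomain_code.split(".")[0])


# Parsing a label into an enum rejects anything outside the taxonomy.
entity = Entity("AI")
parent = domain_number("7.3")
print(entity, parent)
```

Using closed enumerations means an out-of-taxonomy label raises an error at parse time instead of silently entering the dataset.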

Causal and Domain Taxonomies from MIT AI Risk Repository

Harm Severity Scales

To my knowledge, there’s no appropriate rubric for ascribing severity to a taxonomy of AI harm across multiple categories including systemic harm. (The UK National Risk Register uses an impact scale with 7 categories but does not publish criteria for assessing harms such as loss of privacy, differential treatment, harm to civil rights etc). The MIT Domain Taxonomy presented above is focused on types of risk rather than types of harm and so does not include specific categories for some of the types of harm that policymakers would likely be interested in evaluating - such as physical harm (including loss of life), financial loss and damage to property. I therefore used the CSET AI harm taxonomy to create a Harm Severity Scale across 10 categories with quantifiable criteria to support objective assessment.

A review of the harm severity scale will be conducted as part of follow-up work to ensure it supports specific user-requirements.

Incident Classification

The tool processes the input incident reports in 2 stages:

Classifying the associated risk according to the MIT AI Risk Repository taxonomies

Assigning harm severity ratings across each of the categories
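The two stages above can be sketched as two sequential model calls whose outputs are merged into one record. This is a simplified sketch under stated assumptions: the prompt wording, the JSON field names and the `fake_llm` stand-in are hypothetical, and a real model call would replace the stub.

```python
import json
from typing import Callable


def classify_incident(report_text: str, ask_llm: Callable[[str], str]) -> dict:
    """Run both stages on one report and merge their JSON outputs."""
    risk = json.loads(ask_llm("STAGE 1 - classify risk (causal and domain):\n" + report_text))
    harm = json.loads(ask_llm("STAGE 2 - rate harm severity per category:\n" + report_text))
    return {"risk": risk, "harm": harm}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; returns canned JSON for illustration."""
    if prompt.startswith("STAGE 1"):
        return json.dumps({
            "entity": "Human",
            "intent": "Intentional",
            "timing": "Post-deployment",
            "domain": "4 Malicious Actors",
        })
    return json.dumps({"financial_loss": 4})


# Hypothetical report text, not a real AIID entry.
result = classify_incident("Scammers used a cloned voice to request a bank transfer.", fake_llm)
print(result["risk"]["domain"], result["harm"])
```

Passing the model call in as a function keeps the pipeline testable without network access and makes it easy to swap models.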

Risk Classification

The tool identifies how the harm came about and what type of harm it is. Specifically, the tool uses the MIT Risk Repository’s Causal and Domain/Subdomain classifications. For each, a short statement is generated to explain why the chosen categories were selected. This provides some traceability and in the case of any misclassification, insight into how it arose.

Example:

Harm Severity Rating

The tool identifies explicitly reported, direct and indirect harm in each of the 10 categories used by the harm severity rating system, focusing on quantified details like injury or economic damage in dollar amounts. Using the severity rating scale, a score is assigned for each category of harm.

Besides explicitly reported harm, the model assesses whether additional harm was likely caused and reports this separately as ‘inferred harm’ that may be worthy of further investigation. For each inferred harm, a short statement is generated to explain the score that has been assigned, providing some traceability.
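One way to keep reported and inferred harm separate is to store them in distinct fields and require a justification for every inferred score. The sketch below assumes integer scores from 0 to 10; the category keys are an illustrative subset and the class name `HarmAssessment` is hypothetical.

```python
from dataclasses import dataclass, field

MAX_SCORE = 10  # assumed top of the severity scale


@dataclass
class HarmAssessment:
    direct: dict = field(default_factory=dict)              # explicitly reported harm
    inferred: dict = field(default_factory=dict)            # likely but unreported harm
    inferred_reasoning: dict = field(default_factory=dict)  # one statement per inferred score

    def __post_init__(self):
        # Every score must sit on the scale.
        for scores in (self.direct, self.inferred):
            for category, score in scores.items():
                if not 0 <= score <= MAX_SCORE:
                    raise ValueError(f"{category}: score {score} is outside 0-{MAX_SCORE}")
        # Every inferred score must be traceable to a stated reason.
        unexplained = set(self.inferred) - set(self.inferred_reasoning)
        if unexplained:
            raise ValueError(f"inferred harm without reasoning: {sorted(unexplained)}")


# Hypothetical assessment, not taken from the dataset.
assessment = HarmAssessment(
    direct={"financial_loss": 4, "physical_harm": 0},
    inferred={"loss_of_privacy": 3},
    inferred_reasoning={"loss_of_privacy": "Voice samples were probably gathered without consent."},
)
print(assessment.direct, assessment.inferred)
```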

Example:

Addressing Low-Quality Information

Sometimes, reports lack sufficient information to reliably classify and score the incident. To identify these instances, I programmed the tool to report a confidence level alongside its output classifications, based on the information available in the reports, and to highlight missing information.

This allows users to exclude incidents from their analysis where the validity of the classification is less certain, or where important information is likely missing. These statistics can also be aggregated to assess the quality of the dataset as a whole.

Confidence in Assessment: The tool generates a confidence rating for the analysis of each incident (High/Medium/Low), providing reasoning for the rating and identifying areas of uncertainty in the output. This is intended to flag incidents that users may wish to exclude from analysis, or that warrant further investigation before any action is taken based on the analysis.

Missing Information: For each incident, the tool assesses whether there was sufficient information in the reports to classify the incident and generate harm severity scores. It is prompted to identify any additional information that would have improved the quality of, or confidence in, the analysis. This information may be useful in informing the design of new incident reporting processes.
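A short sketch of how these two fields might be used downstream: filtering out low-confidence records and aggregating the flags to gauge dataset quality. The record layout, field names and example values are assumptions for illustration only.

```python
from collections import Counter

# Hypothetical classified records (made-up data).
records = [
    {"id": "A", "confidence": "High", "missing_info": []},
    {"id": "B", "confidence": "Low", "missing_info": ["number of people affected"]},
    {"id": "C", "confidence": "Medium", "missing_info": ["financial loss amount"]},
    {"id": "D", "confidence": "High", "missing_info": []},
    {"id": "E", "confidence": "Low", "missing_info": ["number of people affected"]},
]


def keep_confident(rows, allowed=("High", "Medium")):
    """Drop records whose confidence rating is not in the allowed set."""
    return [row for row in rows if row["confidence"] in allowed]


kept_ids = [row["id"] for row in keep_confident(records)]

# Aggregate views: how confident is the dataset overall, and what is most often missing?
confidence_counts = Counter(row["confidence"] for row in records)
missing_counts = Counter(item for row in records for item in row["missing_info"])
print(kept_ids, confidence_counts, missing_counts.most_common(1))
```

The most frequently missing items are the kind of signal that could inform which fields a new reporting form should make mandatory.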

Example:

Outputs

Update: The outputs discussed in this post were based on analysis conducted in October 2024. The live dashboards have been updated since then, to include the latest reports from the AI Incident Database, so the figures below may vary from live data.

Categorising incidents and assigning harm scores has allowed us to draw out some initial insights which - with more complete input data - could have implications for AI policy.

Whilst the patterns they identify are interesting, these results should only be taken as illustrative because of the aforementioned limitations in the datasets used and because validation studies are still underway. We hope they nonetheless demonstrate how certain identified trends could raise questions like “why are harms from malicious actors seemingly increasing?” (output 2)

Output 1 - Risk Domain Distribution

The domain with most reported incidents is:

7 AI system safety, failures, & limitations (30%)

followed by 1 Discrimination and Toxicity (23%)

Within domain 7 (AI system safety, failures, & limitations), the vast majority of reported incidents were in the sub-domain:

7.3 Lack of capability or robustness (227 of 240)
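As a quick arithmetic check on the figures above, the snippet below uses only the numbers quoted in the text. The variable names are illustrative, and because the 30% share is rounded, the implied dataset size is approximate.

```python
domain_7_incidents = 240    # incidents in domain 7, from the text
subdomain_7_3 = 227         # of which sub-domain 7.3, from the text
domain_7_share = 0.30       # domain 7 as a share of all incidents (rounded in the text)

# Share of domain 7 incidents that fall in sub-domain 7.3.
share_7_3 = subdomain_7_3 / domain_7_incidents

# Dataset size implied by 240 incidents making up roughly 30% of the total.
implied_total = domain_7_incidents / domain_7_share

print(f"{share_7_3:.1%} of domain 7 incidents are in sub-domain 7.3")
print(f"implied dataset size: about {implied_total:.0f} incidents")
```

The implied total is in line with the ~800 incidents cited for the AI Incident Database.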

Preliminary analysis suggests that a high proportion of these are associated with reported defects in autonomous vehicles - this may be due to reporting bias as safety issues in self-driving cars have been considered particularly newsworthy. This merits further investigation.

The number of incidents within the Socioeconomic & Environmental domain is relatively low compared with other categories. This may be accounted for in part by some overlap or ambiguity with domain 1 (discrimination-related risks) and domain 4 (economic loss caused by malicious actors) - worth further exploration.

Output 2 - Temporal Trends

The proportion of reported incidents in the domains 3 Misinformation and 4 Malicious Actors has grown every year since 2020.

Both were small minority domains prior to 2020, and they now form the 2 most significant domains by proportion.

Year 2024 to date: 3 Misinformation: 31% of reported incidents

Year 2024 to date: 4 Malicious Actors: 25% of reported incidents

The proportion of all reported incidents where the cause was Intentional has grown by at least 5% every year since 2020

Note: The relative decrease of incidents in domain 7 AI system safety, failures and limitations is caused by overall numbers of incidents increasing, whilst the number within this category remains fairly stable. This draws attention to the fact that reporting bias will affect these distributions - society and the media may become desensitised to certain types of incident if they are very widespread and stop reporting them.
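The effect described in the note is easy to reproduce: if yearly totals grow while one domain's count stays flat, that domain's share falls. The sketch below computes per-year domain shares from (year, domain) pairs. The data is made up and the function name `yearly_domain_share` is hypothetical.

```python
from collections import Counter, defaultdict

SAFETY = "7 AI system safety, failures, & limitations"


def yearly_domain_share(incidents):
    """Map each year to {domain: share of that year's reported incidents}."""
    counts = defaultdict(Counter)
    for year, domain in incidents:
        counts[year][domain] += 1
    return {
        year: {domain: n / sum(per_year.values()) for domain, n in per_year.items()}
        for year, per_year in sorted(counts.items())
    }


# Made-up data: domain 7 has two incidents in both years, but the total grows from 4 to 6.
incidents = [
    (2023, "3 Misinformation"), (2023, SAFETY), (2023, SAFETY),
    (2023, "1 Discrimination and Toxicity"),
    (2024, "3 Misinformation"), (2024, "3 Misinformation"),
    (2024, "4 Malicious Actors"), (2024, "4 Malicious Actors"),
    (2024, SAFETY), (2024, SAFETY),
]
shares = yearly_domain_share(incidents)
print(shares[2023][SAFETY], shares[2024][SAFETY])
```

Reporting counts alongside shares helps a reader tell a real decline apart from dilution by growth elsewhere.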

Using the tool to drill into these groupings by filtering on Risk Domain, it is possible to build a qualitative picture of the types of incidents within each category. For example:

Many of the 2024 incidents classified within the Misinformation category involve deepfake content intended to influence election results:

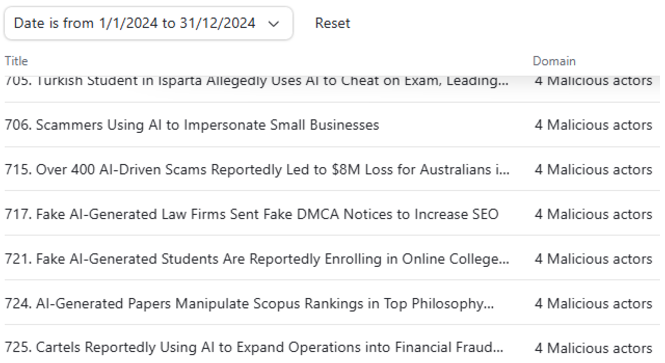

Many of the 2024 incidents associated with Malicious Actors involve scams, fraud or students cheating:

The following sub-domains have shown significant increases as a proportion of reported incidents over the past few years:

3.1 False or misleading information

4.3 Fraud, scams and targeted manipulation

Output 3 - Trends in High Severity Incidents

Within incidents where at least one harm category was rated 6 or more out of 10, the proportion in domains 3 Misinformation and 4 Malicious Actors has increased significantly over the past few years.
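The selection rule used here (at least one harm category rated 6 or more) can be written as a simple filter. The sketch below applies it to made-up records; the field names and category keys are assumptions, and only the threshold comes from the text.

```python
HIGH_SEVERITY_THRESHOLD = 6  # "rated 6 or more out of 10", per the text


def is_high_severity(scores, threshold=HIGH_SEVERITY_THRESHOLD):
    """True if any harm category meets or exceeds the threshold."""
    return any(score >= threshold for score in scores.values())


# Hypothetical records (made-up data).
records = [
    {"id": "A", "domain": "3 Misinformation", "scores": {"harm_to_civil_rights": 7, "financial_loss": 1}},
    {"id": "B", "domain": "7 AI system safety, failures, & limitations", "scores": {"physical_harm": 5}},
    {"id": "C", "domain": "4 Malicious Actors", "scores": {"financial_loss": 6}},
]

high_severity_ids = [r["id"] for r in records if is_high_severity(r["scores"])]
print(high_severity_ids)
```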

Sharp increases in severe incidents would demand urgent policy action on AI. Such graphs - well backed-up - could help to inspire action. For example, Epoch’s graph of compute trends, alongside capability evaluations, helped to justify policy choices like the use of compute thresholds to designate the latest, most powerful AI systems.

Looking just at incidents with a medium/high severity rating in the category of Harm to Civil Rights, 12 incidents out of a total of 24 in the current year to date were classified in the 4 Malicious Actors domain.

It’s worth noting that when exploring higher severity risks, the numbers of incidents are much lower as a proportion of the dataset and therefore more prone to sampling bias. Patterns observed should be followed up with additional validation.

Explore more trends with the Web Interface

To explore more results and trends yourself, I made a dashboard, broken out as:

Home: Risk Classification - distribution of incident classifications (causal and domain) across the entire dataset

Record View - classification and harm severity scores for each individual record, including summary of reasoning and confidence in analysis. This supports filtering by any combination of data fields, thereby supporting the investigation of a wide range of hypotheses.

Timeline: Risk Classification - distribution of incident classifications by year

Timeline: Sub-domains - distribution of incident sub-domains by year

Timeline: High Severity Incidents - incidents with high direct harm severity scores by year

Timeline: High Severity Multiple Categories - incidents causing severe harm in more than one harm category

Timeline: Direct Harm Caused - distribution of harm severity scores by year

Information Quality: Assessment of confidence in classifications and whether the reports included adequate details.

Clicking through each graph takes you to the full details of each individual record, including all classifications, harm severity ratings and a short summary explaining the reasoning used by the model in its classifications. This provides some traceability and a means to understand how any misclassifications arose which could feed into improvements in methodology to increase reliability of future iterations.

Future Work

This work proves the concept of a scalable incident classification tool and paves the way for future work to explore its usefulness, validity, limitations and potential, which will be focused around the following activities:

User-stories - continue collecting user-stories to refine the tool in order to make it as relevant and useful as possible.

Validation study - compare a sample of incident classifications with human analysis to understand the validity and reliability of the model outputs.

Iterate methodology to improve validity - once a target sample of human classifications is available, the process can be updated and outputs evaluated in order to incorporate changes that improve validity.

Incorporate Root Cause Analysis - the analysis lends itself to working alongside root cause analysis such as Ishikawa (identification of potential contributing causes) and Fault Tree Analysis (deductive analysis of how contributing causes interact in conjunctive/disjunctive combination)

Adapt for other incident datasets - the process could be applied to new datasets of reports to provide additional learning from a wider sample

Explore further insights from the analysis - what real-world policy decisions could insights from this analysis inform?

Link to safety cases and risk assessments - explore how the output of this type of analysis could be used as evidence to update risk assessments or safety cases

Lessons learned for new incident monitoring processes. For example, are there commonalities in missing pieces of information in incident reports, or where analyses have low confidence scores?

The code repository will be made available as open-source to encourage users to evaluate and contribute improvements.

Please feel free to share feedback using this form - this will shape the direction of the work and help to make the tool as useful and relevant as possible.

Conclusion

I have created a tool that enriches existing datasets of incident reports by classifying risks using a common taxonomy, and scoring severity of harm caused. This improves the value of the data for the purposes of safety learning and establishing accountability for harms. Using the tool to analyse the AI Incident Database provides some demonstrative insights into the distributions and trends of incident classifications and severities. We expect that applying the tool to any dataset of incident reports will improve understanding of patterns in the data.

Preliminary feedback we’ve received from potential users has indicated that the approach holds potential and would be useful if its validity and limitations were clearly understood - the subject of future work. To our knowledge, there is no existing tool that presents such data graphically or allows users to easily explore and extract insights from it. Further still, the tool provides traceability by assigning confidence scores and justifying its classifications explicitly.

The results of this study are consistent with our original hypothesis (“Incident classification by LLM could address the needs of policymakers by providing quantitative information on which to base policy decisions”) and encourage us to follow up with further work to rigorously capture user-stories and validate the analysis, building understanding of the approach’s real-world value to policymakers.

The value and validity of any incident monitoring process will always be constrained by the quality, consistency and relevance of the data being fed into it as inputs. We hope this work will inform the design of new incident reporting systems by providing insights into policymakers’ needs.

Acknowledgements

Thanks to Rebecca Scholefield, Alejandro Ortega and Adam Jones for providing feedback on the draft.